Introduction

Artificial Intelligence (AI) is one of the defining trends of the 21st century and a major focus of the tech sector. You have likely seen and used AI on mobile phones, laptops, and other devices. However, AI does not exist in isolation: a handful of supporting technologies enable it.

In this article, we take a detailed look at one of them: edge computing, a technology that brings AI closer to where data is produced. Let’s begin.

Cloud Computing – A Centre of AI?

As Artificial Intelligence gains momentum, Cloud Computing has become an integral part of its evolution. The relationship is a strained one, however. Why? Mainly because Cloud-based AI systems run their core AI algorithms in a remote data center.

- Data must be transferred continuously between devices and data centers, which makes it difficult to integrate a robust artificial intelligence system.

- Cloud computing also suffers from latency. Sending data to a remote data center, processing it there, and returning intermediate results takes time, so some delay is always expected. Latency also depends on traffic: it increases as the total number of users on the network grows.

- When using Cloud computing, highly sensitive information is stored and processed at a location the user does not control. This is a threat to the data, as it could reach unauthorized persons.
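
The latency point in the list above can be made concrete with a rough sketch. The model below is an assumption made for illustration, not a measurement: response time is taken to be the network round trip, inflated by a hypothetical congestion factor, plus the compute time. All numbers and function names are invented for the example.

```python
def round_trip_ms(base_rtt_ms: float, congestion: float) -> float:
    """Network round trip, inflated by a congestion factor (0.0 = idle network).

    The linear inflation is a simplifying assumption, not a real traffic model.
    """
    return base_rtt_ms * (1.0 + congestion)


def response_ms(base_rtt_ms: float, congestion: float, compute_ms: float) -> float:
    """Total time for one request: network round trip plus compute time."""
    return round_trip_ms(base_rtt_ms, congestion) + compute_ms


# Hypothetical figures: a distant data center computes quickly but is far away;
# an edge device computes more slowly but is essentially next to the data.
cloud_quiet = response_ms(base_rtt_ms=60.0, congestion=0.0, compute_ms=5.0)
cloud_busy = response_ms(base_rtt_ms=60.0, congestion=1.5, compute_ms=5.0)
edge_local = response_ms(base_rtt_ms=2.0, congestion=0.0, compute_ms=20.0)

print(f"cloud, quiet network: {cloud_quiet} ms")
print(f"cloud, busy network:  {cloud_busy} ms")
print(f"edge, local:          {edge_local} ms")
```

Under these assumed figures the cloud response slows down as traffic grows, while the local response stays constant even though the device itself is slower at computing.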

By now, it should be clear that Cloud Computing comes with a handful of issues. This is where Edge Computing becomes important. Below, you will read about the definition of Edge Computing and why it matters for Artificial Intelligence.

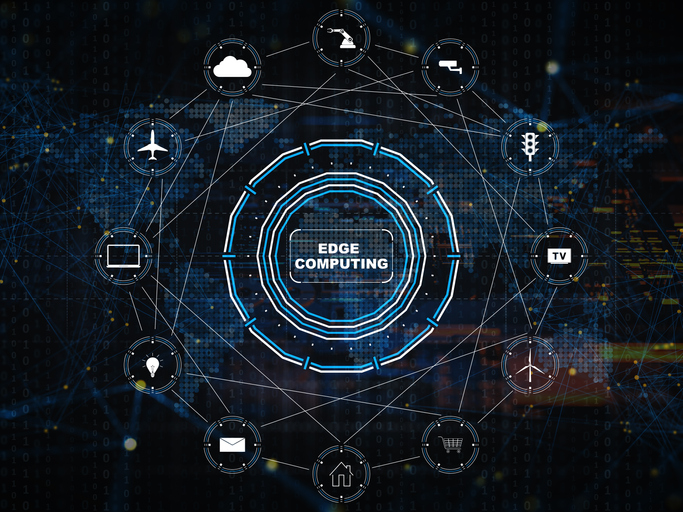

What is Edge Computing?

Edge Computing can be defined as a technology that addresses many of the problems of running Artificial Intelligence on the Cloud. It moves the execution of AI from the data center to a device at or near the source of the data.

The use of IoT devices has increased significantly, and with it the volume of data they generate and the bandwidth they demand. Cloud computing is a centralized computing method, and on its own it is not sufficient to support the huge and growing demand for intelligent data processing and smart services.

Here, Edge computing comes as a relief. Where Cloud computing is centralized, Edge computing is distributed. Instead of sending work to cloud servers, it performs the work at the edge of the network.

So, the next time you wonder why Edge Computing matters, remember that it keeps computation and data storage close to the user, in terms of both network distance and physical distance.

Benefits of Edge Computing

- Edge Artificial Intelligence has a ton of benefits. First, it allows computation to be executed locally. Second, it reduces latency and speeds up access to network information.

- Edge Artificial Intelligence takes AI to the source of the data, so that data generation and computation can take place in the same location at the same time.

- The primary aim of Edge computing is to relocate computing resources and storage capabilities to the network’s edge.

Why Edge Computing?

Edge computing offers geographic distribution for localized processing. By this, we mean that Edge AI supports processing data at the source itself. Today, many IoT devices, applications, and AI systems benefit massively from this feature. Edge computing also supports quicker and more accurate data analysis, because data does not have to be moved to a centralized location first.

Edge AI offers closer proximity to users. How? Edge Computing provides services and computational resources locally, so users can draw on local network information to make better service usage decisions.

Edge computing supports quick and hassle-free response times. This is possible because of the low latency it offers. With low latency, users can complete resource-intensive computing tasks in a short time frame. This benefits users who require fast responses to data.

Edge Artificial Intelligence keeps bandwidth usage low. Today, devices generate a huge amount of data, and transmitting all of it consumes a correspondingly huge amount of bandwidth. Edge computing offers an effective solution to this problem.

Edge AI decreases the distance between the computing device and the data source. This reduces the need for long-distance communication between the client and server. All in all, Edge Computing decreases bandwidth usage and the latency issues linked to it.
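
To illustrate the bandwidth point, here is a minimal sketch in which an edge device summarizes its sensor readings locally and sends only the summary upstream. The readings, the 8-bytes-per-value payload size, and the `summarize` helper are all assumptions made for the example; a real deployment would have its own data format and protocol overhead.

```python
import statistics

BYTES_PER_VALUE = 8  # assumed size of one numeric value on the wire


def summarize(readings: list[float]) -> dict[str, float]:
    """Reduce a batch of raw readings to a few statistics, computed on the device."""
    return {
        "count": float(len(readings)),
        "mean": statistics.fmean(readings),
        "max": max(readings),
    }


# Hypothetical batch: 600 temperature readings collected by one device.
readings = [20.0 + (i % 10) * 0.5 for i in range(600)]

# Cloud-only approach: every raw reading is sent to the data center.
raw_bytes = len(readings) * BYTES_PER_VALUE

# Edge approach: only the locally computed summary is sent.
summary = summarize(readings)
summary_bytes = len(summary) * BYTES_PER_VALUE

reduction = 1 - summary_bytes / raw_bytes
print(f"raw upload:     {raw_bytes} bytes")
print(f"summary upload: {summary_bytes} bytes")
print(f"reduction:      {reduction:.1%}")
```

The trade-off in this sketch is that the data center no longer sees individual readings, so this approach fits cases where the summary is all that downstream services need.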

The Final Takeaway

Edge Computing is gaining popularity and will continue to do so. In the future, edge artificial intelligence will become an inseparable part of Artificial Intelligence. With Edge AI, you can also expect wider use of single-board computers, which will offer exceptional features for boosted computing.

To conclude: yes, Edge Computing is highly important for Artificial Intelligence.